You can rate examples to help us improve their quality. These are the top-rated real-world Python examples of `xgboost.sklearn.XGBRegressor`, extracted from open-source projects. Python XGBRegressor - 9 examples found.

What is XGBoost? XGBoost is one of the leading models for working with standard tabular data (as opposed to more exotic types of data like images and videos). A regressor is created with `from xgboost import XGBRegressor` followed by `model = XGBRegressor(objective="reg:squarederror", n_estimators=1000)`. XGBoost, LightGBM, and CatBoost are separate gradient-boosting libraries whose estimators follow the scikit-learn API; scikit-learn, the Python machine learning library, also ships its own gradient-boosting estimators.

For time series data, the training and test sets are built with `X_train, Y_train = create_dataset(train, lookback)` and `X_test, Y_test = create_dataset(test, lookback)`. The XGBRegressor is now fit on the training data.

In the blending example, the folds are created with `skf = list(StratifiedKFold(Y_dev, n_folds, shuffle=True))`, which is the call signature of the older `sklearn.cross_validation` module. The blend matrices are `blend_train = np.zeros((X_dev.shape[0], len(clfs)))` (number of training data x number of classifiers) and `blend_test = np.zeros((X_test.shape[0], len(clfs)))` (number of testing data x number of classifiers). Their shapes are reported with `print('X_test.shape = %s' % (str(X_test.shape)))` and `print('blend_train.shape = %s' % (str(blend_train.shape)))`.

The regressors in the example include `GradientBoostingRegressor(n_estimators=500, max_depth=6, min_samples_split=1, min_samples_leaf=15, learning_rate=0.035, loss='ls', random_state=10)` and `DecisionTreeRegressor(criterion='mse', splitter='random', max_depth=4, min_samples_split=7, min_samples_leaf=30, min_weight_fraction_leaf=0.0, max_features='sqrt', random_state=None, max_leaf_nodes=None, presort=False)`. These calls use older scikit-learn parameter values such as `loss='ls'` and `criterion='mse'`, which newer releases rename to `'squared_error'`.

A model trained with CatBoostClassifier can also be exported to Apple CoreML for further usage on iOS devices: train the model and save it in CoreML format.
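The `blend_train` and `blend_test` matrices mentioned above are typically filled with out-of-fold predictions. The following is a minimal sketch of that idea, not the original script: `MeanRegressor` and `blend_features` are hypothetical names, the folds are simple interleaved splits rather than `StratifiedKFold`, and averaging the test predictions over folds is an assumption about how the blend is built. It uses plain Python lists so that it runs without any third-party library.

```python
class MeanRegressor:
    """Toy stand-in model (hypothetical): always predicts the training mean."""

    def fit(self, X, y):
        self.mean_ = sum(y) / len(y)
        return self

    def predict(self, X):
        return [self.mean_ for _ in X]


def blend_features(make_models, X_dev, Y_dev, X_test, n_folds):
    """Build out-of-fold prediction matrices for a list of model factories."""
    n_models = len(make_models)
    # Number of training data x number of models
    blend_train = [[0.0] * n_models for _ in X_dev]
    # Number of testing data x number of models
    blend_test = [[0.0] * n_models for _ in X_test]
    # Interleaved folds: fold i holds rows i, i + n_folds, i + 2 * n_folds, ...
    folds = [list(range(i, len(X_dev), n_folds)) for i in range(n_folds)]

    for j, make_model in enumerate(make_models):
        for held_out in folds:
            held = set(held_out)
            train_idx = [i for i in range(len(X_dev)) if i not in held]
            model = make_model().fit(
                [X_dev[i] for i in train_idx],
                [Y_dev[i] for i in train_idx],
            )
            # Out-of-fold predictions fill the held-out rows of blend_train
            preds = model.predict([X_dev[i] for i in held_out])
            for i, p in zip(held_out, preds):
                blend_train[i][j] = p
            # Test predictions are averaged over the folds
            for i, p in enumerate(model.predict(X_test)):
                blend_test[i][j] += p / n_folds
    return blend_train, blend_test


X_dev = [[0], [1], [2], [3]]
Y_dev = [0.0, 2.0, 4.0, 6.0]
X_test = [[4], [5]]
blend_train, blend_test = blend_features([MeanRegressor], X_dev, Y_dev, X_test, n_folds=2)
print(blend_train)  # [[4.0], [2.0], [4.0], [2.0]]
print(blend_test)   # [[3.0], [3.0]]
```

Any object with `fit` and `predict` methods could be passed in place of the toy model, and a second-level model would then be trained on `blend_train`.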

PYTHON XGBREGRESSOR EXAMPLE SERIES

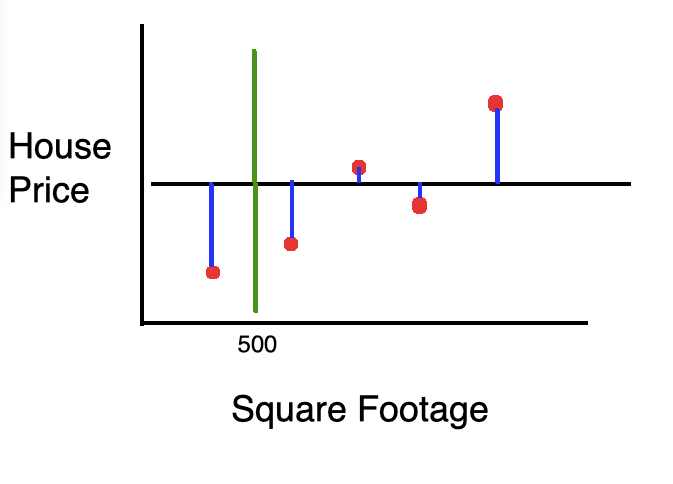

In the context of time series specifically, XGBRegressor uses the lags of the time series as features in predicting the outcome variable. XGBRegressor seeks to accomplish the same thing as a gradient-boosting classifier, the only difference being that we are using this model to solve a regression problem, i.e. to predict a continuous value.

Further regressors in the example include `neighbors.KNeighborsRegressor(128, weights="uniform", leaf_size=5)`, `AdaBoostRegressor(base_estimator=None, n_estimators=250, learning_rate=0.03, loss='linear', random_state=20160703)`, and `BaggingRegressor(base_estimator=None, n_estimators=200, max_samples=1.0, max_features=1.0, bootstrap=True, bootstrap_features=False, oob_score=False, warm_start=False, n_jobs=1, random_state=None, verbose=0)`. The fragment `min_samples_split=1, n_jobs=-1, random_state=2014),` is the continuation of a constructor call.

In a random forest, for a new data point, make each one of your Ntree trees predict a value and use the average of those predictions as the result.
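To illustrate the lag-feature approach described for time series, here is a minimal sketch. The `create_dataset` body and the `fit_xgb_on_lags` helper are assumptions written for illustration (the source only shows the call `create_dataset(train, lookback)`), and `n_estimators=100` is an arbitrary choice. The model-fitting function imports numpy and xgboost lazily, so the windowing part runs with the standard library alone.

```python
def create_dataset(series, lookback):
    """Turn a 1-D sequence into (lag window, next value) pairs."""
    X, y = [], []
    for i in range(len(series) - lookback):
        X.append(list(series[i:i + lookback]))  # the `lookback` previous values
        y.append(series[i + lookback])          # the value to predict
    return X, y


def fit_xgb_on_lags(series, lookback):
    """Fit an XGBRegressor on lag features (needs numpy and xgboost installed)."""
    import numpy as np
    from xgboost import XGBRegressor

    X, y = create_dataset(series, lookback)
    model = XGBRegressor(objective="reg:squarederror", n_estimators=100)
    model.fit(np.asarray(X, dtype=float), np.asarray(y, dtype=float))
    return model


series = [1, 2, 3, 4, 5, 6]
X, y = create_dataset(series, lookback=3)
print(X)  # [[1, 2, 3], [2, 3, 4], [3, 4, 5]]
print(y)  # [4, 5, 6]
```

Each row of `X` holds the previous `lookback` observations and the matching entry of `y` is the observation that follows them, so calling `fit_xgb_on_lags(series, lookback=3)` would train the regressor to predict the next value from the last three.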

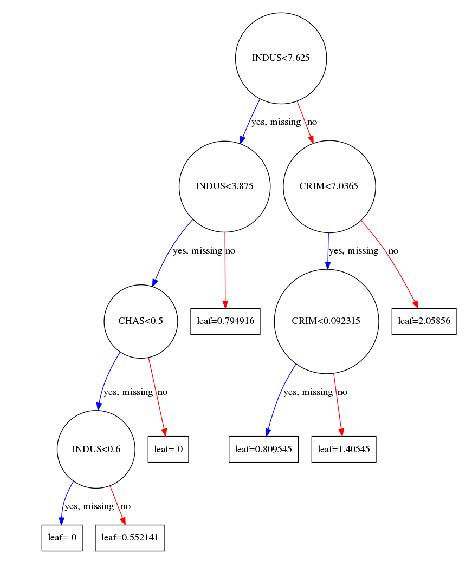

Steps to perform the random forest regression. This is a four step process and our steps are as follows:

1. Pick a random K data points from the training set.
2. Build the decision tree associated to these K data points.
3. Choose the number Ntree of trees you want to build and repeat steps 1 and 2.
4. Use all Ntree trees to predict the value for a new data point.

The example's list of regressors also contains `RandomForestRegressor(n_estimators=500, max_depth=5, min_samples_leaf=6, max_features=0.9,\` (the call is cut off at the line continuation), `ExtraTreesRegressor(n_estimators=n_trees * 20)`, and `BaggingRegressor(base_estimator=xgb.XGBRegressor(**xgb_params0), n_estimators=10, random_state=np.random.RandomState(2016))`. The value assigned to `xgb_params0` is not shown.
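The random forest steps described above can be sketched in plain Python. This is an illustrative toy, not scikit-learn's `RandomForestRegressor`: to stay short it uses one-split regression trees (stumps) on a single feature instead of full decision trees, it samples the K points with replacement, and the names `fit_stump`, `fit_forest`, and `predict_forest` are hypothetical.

```python
import random


def fit_stump(points):
    """Fit a one-split regression tree (stump) on (x, y) pairs."""
    points = sorted(points)
    ys = [y for _, y in points]
    mean_all = sum(ys) / len(ys)
    # (squared error, threshold, left mean, right mean)
    best = (float("inf"), None, mean_all, mean_all)
    for i in range(1, len(points)):
        if points[i][0] == points[i - 1][0]:
            continue  # cannot split between equal x values
        left, right = ys[:i], ys[i:]
        lm, rm = sum(left) / len(left), sum(right) / len(right)
        err = sum((v - lm) ** 2 for v in left) + sum((v - rm) ** 2 for v in right)
        if err < best[0]:
            best = (err, (points[i - 1][0] + points[i][0]) / 2, lm, rm)
    return best[1:]


def predict_stump(stump, x):
    threshold, left_mean, right_mean = stump
    if threshold is None:  # no valid split was found
        return left_mean
    return left_mean if x <= threshold else right_mean


def fit_forest(points, n_tree, k, seed=0):
    rng = random.Random(seed)
    forest = []
    for _ in range(n_tree):                              # step 3: repeat steps 1 and 2
        sample = [rng.choice(points) for _ in range(k)]  # step 1: pick K random points
        forest.append(fit_stump(sample))                 # step 2: build a tree on them
    return forest


def predict_forest(forest, x):
    # Step 4: every tree predicts, and the predictions are averaged
    preds = [predict_stump(stump, x) for stump in forest]
    return sum(preds) / len(preds)


# Toy data: y jumps from 0 to 10 at x = 5
data = [(x, 0.0 if x < 5 else 10.0) for x in range(10)]
forest = fit_forest(data, n_tree=25, k=10)
print(predict_forest(forest, 1), predict_forest(forest, 8))
```

Averaging over many trees built on different random samples is what smooths out the errors of any single tree; a real implementation would also randomize the features considered at each split.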

Source File: ensemble_script_random_version.py

`def run(X, Y, X2, bclf, iteration):`
